Training frontier AI models, especially large generative models, demands extraordinary computational power. That power increasingly comes from AI supercomputing platforms: integrated, high-performance environments that are quickly becoming essential infrastructure for both industry and science. This guide explores the global race to build these platforms, how they accelerate innovation, and what your organization must do to adopt them responsibly.

What Are AI Supercomputing Platforms?

AI supercomputing platforms are purpose-built environments designed for extreme-scale AI and data-intensive workloads. They combine heterogeneous compute, ultra-fast networking, and optimized software. Together, these layers deliver leadership-class performance for training and deploying the most demanding generative AI applications.

Key Components

- Heterogeneous Compute: These systems use specialized accelerators (like GPUs and AI ASICs) alongside general-purpose CPUs. They are highly tuned for parallelism, mixed precision calculations, and massive memory bandwidth.

- High-Speed Fabrics: Ultra-fast interconnects, including InfiniBand and advanced Ethernet, connect thousands of accelerators and nodes. They keep latency low while sustaining very high data throughput.

- Full-Stack Software: Optimized software handles everything from cluster management to workload scheduling. AI libraries, security features, and monitoring tools enable reliable operations at scale.

Why it matters: The U.S. Department of Energy (DOE) notes that exascale-class supercomputers unlock breakthroughs across many fields. These systems are especially well-suited for machine learning and artificial intelligence research.
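
To make the role of the fabric concrete, here is a rough back-of-the-envelope sketch (not a benchmark) of how long one gradient synchronization might take with a ring all-reduce, a common collective pattern in data-parallel training. The function name, the 7-billion-parameter model, and the link speeds are illustrative assumptions, not figures from any specific system.

```python
def ring_allreduce_seconds(payload_bytes: float, num_gpus: int,
                           link_gbytes_per_s: float,
                           latency_s: float = 0.0) -> float:
    """Estimate the time for one ring all-reduce.

    Assumes a simple bandwidth-plus-latency model: the payload is split
    into num_gpus chunks, and each GPU sends one chunk per step over
    2 * (num_gpus - 1) steps (reduce-scatter, then all-gather).
    """
    if num_gpus < 2:
        return 0.0  # nothing to synchronize on a single device
    steps = 2 * (num_gpus - 1)
    chunk_bytes = payload_bytes / num_gpus
    per_step = chunk_bytes / (link_gbytes_per_s * 1e9) + latency_s
    return steps * per_step


# Hypothetical workload: 7e9 parameters with 2-byte (16-bit) gradients.
gradient_bytes = 7e9 * 2

# Hypothetical per-GPU link speeds in GB/s, chosen only for comparison.
for link_speed in (12.5, 50.0):
    seconds = ring_allreduce_seconds(gradient_bytes, num_gpus=8,
                                     link_gbytes_per_s=link_speed)
    print(f"{link_speed:5.1f} GB/s link: {seconds:.2f} s per synchronization")
```

Under this simplified model, synchronization time scales inversely with link bandwidth, which is why the interconnect can dominate step time once thousands of accelerators exchange gradients on every iteration. Real systems overlap communication with computation and use topology-aware algorithms, so treat the output as an order-of-magnitude guide only.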

The Global Race for AI Supercomputing Capacity

Nations are aggressively investing in AI supercomputing platforms to secure economic and scientific leadership. This capacity is central to national research agendas worldwide.

United States: DOE, NIST, and NSF

The U.S. operates exascale systems such as Frontier, Aurora, and El Capitan. The DOE emphasizes that these systems excel at applying AI and machine learning to scientific research. New partnerships have been announced to build the DOE national laboratory complex’s largest AI supercomputers at Argonne. These systems connect directly to scientific instruments and data assets, accelerating discovery. Policy guidance, including NIST’s AI Risk Management Framework, helps organizations mitigate risks when operating these advanced platforms.

Europe: EuroHPC Joint Undertaking

Europe is also operating leading systems like JUPITER and LUMI. The EuroHPC Joint Undertaking is deploying AI Factories, hubs focused on AI-optimized supercomputers. These centers support trustworthy generative AI for health, climate, manufacturing, and more. EuroHPC runs special calls to provide compute access for large AI models, fostering global initiatives like the Trillion Parameter Consortium.

United Kingdom: National Compute Strategy

The UK is investing heavily to scale its national compute capacity. This includes prioritizing access for crucial public-interest projects. The goal is to reduce reliance on foreign infrastructure and position the UK as an “AI maker.” The AI Playbook provides practical guidance on safe AI adoption. This complements the responsible construction and use of AI supercomputing platforms.

How AI Supercomputing Platforms Accelerate Generative AI

Generative AI models, including large language models (LLMs), require immense compute power. This power is needed for pre-training, fine-tuning, and serving. AI supercomputing platforms are uniquely capable of meeting these extreme demands.

- Faster Training and Inference: Rack-scale architectures and tightly coupled accelerators enable efficient training of models with trillions of parameters. They also support real-time inference at production scale.

- Scalable Operations: Vendor reference architectures and platform tools streamline deployment. They also manage scheduling, monitoring, and optimization for large fleets of AI models.

- Responsible Evaluation: Science-based testing is crucial. NIST’s GenAI evaluations provide frameworks to measure performance, reliability, and trustworthiness as models scale up.

Scientific Breakthroughs Enabled by AI Supercomputing

These platforms serve more than the technology sector; they are catalysts for scientific discovery.

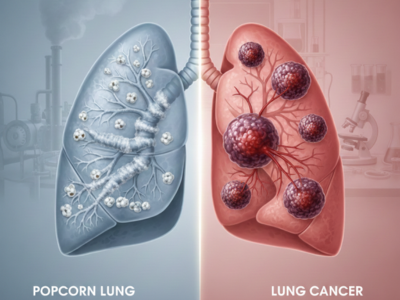

- Drug Discovery and Materials: Exascale hardware and AI together accelerate molecular simulation and protein design. They facilitate materials discovery far beyond classical computational limits.

- Climate and Energy: Supercomputers simulate highly complex climate systems and optimize energy research. AI powerfully augments this modeling to improve both accuracy and speed.

- Open Science: Programs like the NSF’s large-scale infrastructure investments expand access for academia and industry. This democratizes the use of AI supercomputing platforms, catalyzing breakthroughs across many disciplines.

Governance, Safety, and Compliance: Building Trust

Adopting AI supercomputing platforms requires a robust and trustworthy governance posture.

- Risk Management: Use NIST’s AI RMF Generative AI Profile to identify, assess, and mitigate risks across the AI model lifecycle.

- Secure Development: Apply NIST SP 800-218A guidance to integrate secure software practices for all foundation models. This is essential for compliance and safety.

- Data Sovereignty and Access: Align with national strategies, such as the UK Compute Roadmap and EuroHPC policies. This ensures responsible, sovereign compute and equitable access to resources.

Actionable Steps to Prepare Your Organization

You can start preparing for this infrastructure shift today.

- Assess Workload Profiles: Inventory your organization’s training, fine-tuning, and inference demands. Estimate required GPU hours, memory footprint, and interconnect needs.

- Choose an Adoption Path: Evaluate your options based on control and utilization. Consider on-prem AI supercomputing platforms (like DGX SuperPOD class) for sovereign control. Alternatively, look at national facilities (NAIRR pilot, EuroHPC calls) for shared, scaled access.

- Operationalize Safety and Governance: Implement NIST’s testing, evaluation, validation, and verification (TEVV) practices. Use the AI RMF and secure SDLC guidance tailored for generative AI.

- Build Talent and Partnerships: Engage with NSF AI Institutes and EuroHPC training opportunities. Collaborate with national labs and platform vendors to effectively upskill your technical teams.
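
The workload assessment in the first step above can be roughed out in a few lines. This sketch rests on two widely cited rules of thumb, both stated here as assumptions: training compute of roughly 6 FLOPs per parameter per token for dense transformer models, and roughly 16 bytes of training state per parameter for mixed-precision Adam (weights, gradients, and optimizer state, excluding activations). The class name, field names, and example numbers are hypothetical and should be replaced with your own measurements.

```python
from dataclasses import dataclass


@dataclass
class TrainingJob:
    params: float              # number of model parameters
    tokens: float              # number of training tokens
    peak_flops_per_gpu: float  # accelerator peak FLOP/s (assumed value)
    utilization: float         # fraction of peak actually sustained (assumed)


def training_flops(job: TrainingJob) -> float:
    """Approximate total training compute: ~6 FLOPs per parameter per token."""
    return 6.0 * job.params * job.tokens


def gpu_hours(job: TrainingJob) -> float:
    """Convert total FLOPs into GPU hours at the sustained throughput."""
    sustained_flops = job.peak_flops_per_gpu * job.utilization
    return training_flops(job) / sustained_flops / 3600.0


def training_state_gib(params: float, bytes_per_param: float = 16.0) -> float:
    """Memory for weights, gradients, and optimizer state, in GiB.

    The 16 bytes/parameter default assumes mixed-precision Adam and
    ignores activation memory, which depends on batch size and sequence length.
    """
    return params * bytes_per_param / 2**30


# Hypothetical job: 7e9 parameters, 1e12 tokens, 1e15 peak FLOP/s, 40% utilization.
job = TrainingJob(params=7e9, tokens=1e12,
                  peak_flops_per_gpu=1e15, utilization=0.4)

print(f"Estimated GPU hours:      {gpu_hours(job):,.0f}")
print(f"Training state (no acts): {training_state_gib(job.params):,.1f} GiB")
```

Estimates like these are only a starting point for comparing adoption paths. The memory figure indicates whether model state must be sharded across accelerators, which in turn drives interconnect requirements; actual utilization should come from profiling a representative run.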

Final Notes

The global sprint to build AI supercomputing platforms is reshaping scientific research and industry. By combining exascale hardware, advanced interconnects, and full-stack software, these platforms enable generative AI at unprecedented scale while driving scientific breakthroughs in health, energy, and climate. Organizations that plan thoughtfully, aligning with NIST risk frameworks, national strategies, and shared access initiatives, will turn cutting-edge compute power into trusted, transformative outcomes.

FAQs

Compared with standard commodity clusters, AI supercomputing platforms offer much tighter integration of compute, memory, and interconnects (NVLink/InfiniBand). They feature rack-scale liquid cooling and a full-stack orchestration system. These systems are specifically designed for the extreme-scale training and inference that commodity clusters cannot handle.

Researchers, startups, and SMEs can access high-end AI supercomputing platforms without owning them. Programs like the NSF’s NAIRR pilot and EuroHPC AI & Data-Intensive calls provide compute allocations, which broadens access beyond the largest organizations.

To deploy generative AI responsibly on these platforms, adopt NIST’s AI RMF Generative AI Profile and its secure development practices (SP 800-218A). You should also participate in NIST GenAI evaluations to measure your models’ reliability, safety, and robustness before deployment.