Smart Government

The future has arrived: your city’s new manager is an algorithm. In this “smart government,” the city’s A.I. handles the decision-making, directing public services and managing infrastructure. This new boss never tires, never asks for a raise, crunches data instantly, and makes decisions without bias. Sounds like a dream, doesn’t it?

But who is accountable when it messes up? This isn’t science fiction anymore. Cities already use A.I. to manage traffic flow and predict crime, and algorithms manage public resources. In effect, we are promoting A.I. to key roles, and that promotion warrants a serious discussion. What happens when the A.I. fails? That is the crucial issue of accountability.

The Rise of the Algorithmic City

Let’s think about a traditional city for a moment. Bureaucrats, city councils, and planning boards make the decisions through human processes, and those processes are often slow and imperfect.

Now imagine a smart government. Here, an A.I. analyzes traffic patterns and dynamically adjusts streetlights. It also predicts where potholes will form and instantly schedules repairs. The gain in efficiency is huge, and it makes our lives much easier.

Consider public transportation. An A.I. can optimize bus routes using real-time ridership data, predict peak demand times, and adjust the schedule accordingly. The result is shorter wait times and less fuel consumption, which benefits both citizens and the environment. This is the great promise of smart government. But these systems aren’t perfect, and when they fail, they can fail in major ways.

The Accountability Crisis

So, who takes responsibility? When a human makes a mistake, we know whom to blame. If a city manager makes a bad call, we can hold them accountable, vote them out, or even sue the city. But what about an A.I.? Where does the buck stop then?

Imagine an A.I. managing the power grid. A major heatwave strikes, the A.I. miscalculates the load, and the result is a city-wide blackout. Hospitals lose power, as do homes with vital medical equipment, and people get hurt. So who is responsible? Is it the city, the company that built the A.I., the programmer, or the data scientist? The chain of command gets very murky.

In the end, the A.I. is just a tool built on code and trained on data. The programmer wrote the code, the data scientist curated the data, and the city council approved the system. Many people are involved, and each contributes only a small part of the whole, so no one person feels completely responsible. This diffusion of responsibility is dangerous because it can lead to a lack of oversight.

Bias and Unintended Consequences

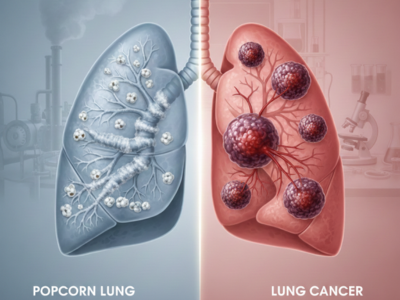

Algorithms are not truly neutral. They are trained on historical data, and that data reflects past human biases. A crime prediction algorithm, for example, might suggest more police presence in certain neighborhoods simply because those neighborhoods were policed more heavily in the past. The A.I. just learns from the data. More patrols then produce more recorded incidents, which in turn justify more patrols. The algorithm amplifies existing inequalities and creates a self-fulfilling prophecy.
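The feedback loop is easier to see in a toy simulation. The sketch below is purely illustrative: the function name, the numbers, and the "shift patrols toward the hot spot" rule are all assumptions made up for this example, not a description of any real predictive-policing system. Both neighborhoods have the same true incident rate, yet the one that starts with more patrols keeps attracting more.

```python
def simulate_hotspot_policing(true_rates, patrols, rounds=5, shift=0.1):
    """Toy model of a predictive-policing feedback loop (hypothetical rule).

    Each round, recorded incidents are proportional to the true rate AND to
    how many patrols are present to record them. The neighborhood with the
    most recorded incidents is treated as the hot spot, and every other
    neighborhood sends a fraction `shift` of its patrols there.
    """
    patrols = list(patrols)
    history = [tuple(patrols)]
    for _ in range(rounds):
        # What gets recorded depends on where the patrols already are.
        recorded = [rate * p for rate, p in zip(true_rates, patrols)]
        hot = max(range(len(patrols)), key=lambda i: recorded[i])
        for i in range(len(patrols)):
            if i != hot:
                moved = patrols[i] * shift
                patrols[i] -= moved
                patrols[hot] += moved
        history.append(tuple(patrols))
    return history


# Two neighborhoods with identical true rates but unequal starting patrols.
history = simulate_hotspot_policing([0.1, 0.1], [60.0, 40.0])
for round_number, allocation in enumerate(history):
    print(round_number, [round(p, 1) for p in allocation])
```

Even in this simplified setting, the initial imbalance grows every round although nothing about the underlying neighborhoods differs. An audit that looked only at recorded incidents would see the allocation as justified.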

This is a major ethical issue. A smart government should serve everyone, not perpetuate inequality. How can we ensure fairness? We need a system of checks and balances to audit these algorithms, look for bias, and test for fairness. That requires both human oversight and public input.

A.I. can also have unintended consequences. An A.I. that optimizes a traffic system might make the main roads faster by diverting traffic through quiet residential areas. That increases noise pollution and creates a safety risk for children. The A.I. saw only one goal, reducing travel time, and didn’t account for anything else. This is a core limitation of A.I.: it optimizes what it is told to optimize.
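A minimal sketch makes the point concrete. Everything here is hypothetical: the plan names, the figures, and the penalty weight are invented for illustration. The same two routing plans are ranked once by travel time alone and once by a cost that also penalizes traffic pushed onto residential streets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoutingPlan:
    name: str
    avg_travel_minutes: float
    residential_vehicles_per_hour: int


def pick_fastest(plans):
    """Single objective: minimize average travel time and nothing else."""
    return min(plans, key=lambda p: p.avg_travel_minutes)


def pick_balanced(plans, minutes_per_residential_vehicle=0.01):
    """Travel time plus a penalty for residential through-traffic.

    The penalty weight is an assumed policy choice that humans would have
    to set; it is not something the optimizer can discover on its own.
    """
    def cost(plan):
        penalty = minutes_per_residential_vehicle * plan.residential_vehicles_per_hour
        return plan.avg_travel_minutes + penalty

    return min(plans, key=cost)


# Made-up figures for two candidate plans.
plans = [
    RoutingPlan("keep traffic on main roads", 22.0, 50),
    RoutingPlan("divert through side streets", 19.0, 600),
]

fastest = pick_fastest(plans)
balanced = pick_balanced(plans)
print(fastest.name)
print(balanced.name)
```

With these assumed numbers the two objectives disagree: the time-only objective picks the diversion, while the penalized objective keeps traffic on the main roads. Choosing the penalty weight is a value judgment, which is exactly where human input belongs.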

The Legal and Moral Quagmire

Whom do you sue when an A.I. fails? What legal precedent even exists? Our legal system isn’t ready for this. We need new laws and new legal frameworks, and we must decide how liability is assigned. A popular debate has emerged here: should an A.I. have legal personhood? Some argue it’s just a machine, while others contend that because it makes complex decisions, it is a new kind of entity altogether.

Moral accountability presents a different challenge. A human decision-maker can feel guilt, learn from their mistakes, and make amends. An A.I. does not feel or learn morally; it simply executes code. This removes the essential human element, which can make governance feel impersonal and erode public trust. To keep that trust, a smart government must be transparent, and its people must understand how it works.

The Way Forward: Hybrid Governance

We can’t just stop progress. A.I. offers great benefits and can make our cities better. What we need is a better model: a hybrid model, a human-A.I. partnership.

Here is what a good hybrid model looks like:

- Human Oversight: Humans must make the final decisions. The A.I. provides the data and offers recommendations; a human reviews them and approves the action. This retains both human accountability and human judgment.

- Transparent Algorithms: The A.I. must be auditable. The code should be open-source and the data accessible, so the public can see and scrutinize how decisions are made. That scrutiny builds trust, which is key for a smart government.

- Ethical Frameworks: We need clear ethical guidelines for A.I. in governance. These frameworks should prioritize public safety, ensure fairness, and protect privacy.

- Defined Accountability: We must create clear rules that define legal liability. The law must specify who is responsible. Is it the vendor, the city official, or a new oversight board?
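As a rough sketch of how human oversight and defined accountability could fit together in software, the example below puts an approval gate between an A.I. recommendation and any action. All class, field, and function names are hypothetical, and a real system would need authentication, persistent storage, and legal review that this sketch leaves out.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Recommendation:
    action: str
    rationale: str
    model_version: str


@dataclass(frozen=True)
class Decision:
    recommendation: Recommendation
    approved: bool
    reviewer: str          # the named human who is accountable
    decided_at: datetime


class ApprovalGate:
    """Nothing the A.I. recommends runs without a recorded human decision."""

    def __init__(self):
        self.audit_log = []

    def review(self, recommendation, reviewer, approved):
        if not reviewer:
            raise ValueError("a named human reviewer is required")
        decision = Decision(recommendation, approved, reviewer,
                            datetime.now(timezone.utc))
        self.audit_log.append(decision)  # kept for later public audit
        return decision

    def execute(self, decision, action_fn):
        # Rejected recommendations are logged but never carried out.
        if not decision.approved:
            return False
        action_fn(decision.recommendation.action)
        return True


gate = ApprovalGate()
performed = []

rec = Recommendation("reroute bus line 12", "ridership forecast", "v1-hypothetical")
approved = gate.review(rec, reviewer="transit director", approved=True)
rejected = gate.review(rec, reviewer="transit director", approved=False)

ran_approved = gate.execute(approved, performed.append)
ran_rejected = gate.execute(rejected, performed.append)
```

The design choice is that every entry in the audit log names a person, so the question of who signed off always has an answer, whatever the law later decides about vendor or city liability.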

The Ultimate Promotion

An A.I. should not get the ultimate promotion. It should not be our city’s manager, at least not yet. Instead, it should be a trusted advisor, a powerful tool that assists human leaders. A true smart government uses A.I. to empower humans, not to replace them.

We need to start this conversation now, before an A.I. makes a serious mistake or an algorithm causes real harm. First and foremost, we need to decide who is responsible. The future of our cities and our democracy depends on that decision. We must ensure that A.I. serves us and that we remain in charge. This is the ultimate test: will we be smart enough to govern our smart government?